Last Update: March 9, 2020

Supervised machine learning consists of predicting the class or the value of output target data by learning its relationship with input predictor data. The main supervised learning tasks are classification and regression.

This topic is part of Regression Machine Learning with R course. Feel free to take a look at Course Curriculum.

This tutorial has an educational and informational purpose and doesn’t constitute any type of forecasting, business, trading or investment advice. All content, including code and data, is presented for personal educational use exclusively and with no guarantee of exactness or completeness. Past performance doesn’t guarantee future results. Please read full Disclaimer.

An example of a supervised learning meta-algorithm is random forest [1], which predicts the output target feature by averaging the predictions of independently built decision trees, each fitted on a bootstrap sample. Bootstrap aggregation, or bagging, is used to lower the variance error of the individual decision trees.

1. Trees algorithm definition.

The classification and regression trees (CART) algorithm is a greedy top-down approach that finds recursive binary node splits by locally minimizing, at each stage, the variance at terminal nodes, measured through the sum of squared errors function.

1.1. Trees algorithm formula notation.

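$$SSE = \sum_{i=1}^{n} (y_i - \bar{y}_m)^2, \qquad \bar{y}_m = \frac{1}{m} \sum_{j=1}^{m} y_j$$

Where $y$ = output target feature data,

$\bar{y}_m$ = terminal node output target feature mean (of the terminal node that contains observation $i$),

$n$ = number of observations,

$m$ = number of observations in terminal node.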
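To make the split search concrete, here is a minimal base R sketch of one greedy step on a single numeric predictor. The helper names `sse` and `best_split` and the toy data are hypothetical and only for illustration; real CART implementations repeat this step recursively and across all predictors.

```r
# Sum of squared errors of a node around its own mean
sse <- function(y) sum((y - mean(y))^2)

# Hypothetical helper: best single binary split of y on one numeric predictor x
best_split <- function(x, y) {
  xs <- sort(unique(x))
  # Candidate thresholds: midpoints between consecutive distinct x values
  cuts <- (head(xs, -1) + tail(xs, -1)) / 2
  # Total SSE of the two child nodes for every candidate threshold
  total <- sapply(cuts, function(cut) sse(y[x <= cut]) + sse(y[x > cut]))
  best <- which.min(total)
  list(cut = cuts[best], sse = total[best])
}

# Toy data (made up): two clearly separated groups
x <- c(1, 2, 3, 10, 11, 12)
y <- c(1.0, 1.2, 0.8, 5.0, 5.2, 4.8)

res <- best_split(x, y)
res$cut   # threshold chosen between the two groups
res$sse   # total SSE after the split, far below sse(y) before it
```

Applying `best_split` again inside each child node would grow the tree one level deeper, which is the recursive part of the algorithm.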

2. Trees bagging algorithm.

The trees bagging algorithm predicts the output target feature as the arithmetic mean of the predictions of independently built decision trees. Random forests combine random feature selection with bootstrap aggregation, or bagging. Bootstrap consists of random sampling with replacement.

2.1. Trees bagging algorithm formula notation.

$$\hat{y} = \frac{1}{k} \sum_{t=1}^{k} \bar{y}_{m,t}$$

Where $\hat{y}$ = independently built decision trees output target feature mean prediction,

$\bar{y}_{m,t}$ = terminal node output target feature mean of tree $t$,

$k$ = number of independently built decision trees.
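The averaging step can be sketched in base R as follows. This is an illustration under simplifying assumptions, not the randomForest implementation: each "tree" is a hypothetical one-split stump (`fit_stump`), the data are made up, and random feature selection is omitted because there is only one predictor.

```r
set.seed(1)  # arbitrary seed, only so the sketch is reproducible

# Toy data (made up): noisy step function
x <- seq(1, 20)
y <- ifelse(x <= 10, 1, 5) + rnorm(20, sd = 0.3)

# Hypothetical one-split tree ("stump"): returns a prediction function
fit_stump <- function(x, y) {
  xs <- sort(unique(x))
  cuts <- (head(xs, -1) + tail(xs, -1)) / 2
  total <- sapply(cuts, function(cut) {
    l <- y[x <= cut]
    r <- y[x > cut]
    sum((l - mean(l))^2) + sum((r - mean(r))^2)
  })
  cut <- cuts[which.min(total)]
  left <- mean(y[x <= cut])   # terminal node mean, left child
  right <- mean(y[x > cut])   # terminal node mean, right child
  function(newx) ifelse(newx <= cut, left, right)
}

k <- 25  # number of independently built trees
trees <- lapply(seq_len(k), function(t) {
  idx <- sample(length(x), replace = TRUE)  # bootstrap: sampling with replacement
  fit_stump(x[idx], y[idx])
})

# Bagged prediction: arithmetic mean of the k tree predictions
preds <- sapply(trees, function(tree) tree(c(3, 17)))  # 2 x k matrix
bagged <- rowMeans(preds)
bagged
```

Each stump sees a different bootstrap sample, so individual predictions vary; averaging them is what reduces the variance.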

3. R script code example.

3.1. Load R packages [2].

library('quantmod')

library('randomForest')

3.2. Random forest regression data reading, target and predictor features creation, training and testing ranges delimiting.

- Data: S&P 500® index replicating ETF (ticker symbol: SPY) daily adjusted close prices (2007-2015).

- Daily arithmetic returns are used as target feature (current day) and predictor feature (previous day).

- Target and predictor feature creation and training and testing range delimiting are not fixed and are only included for educational purposes.
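As a rough illustration of the feature construction described above, the following base R sketch computes daily arithmetic returns and pairs each return with the previous day's return. The prices are made up (not SPY data), and this is a plain-vector version of the idea rather than the quantmod and xts calls used in the script.

```r
# Made-up prices, not SPY data
prices <- c(100, 102, 101, 103, 104)

# Daily arithmetic return: P_t / P_{t-1} - 1
ret <- prices[-1] / prices[-length(prices)] - 1

# Target: current-day return; predictor: previous-day return
target <- ret[-1]
predictor <- ret[-length(ret)]

# Column names mirror the ones used in the script
features <- data.frame(rspy = target, rspy1 = predictor)
features
```

The first return has no previous-day value, so one row is lost, which is the role `na.exclude` plays in the script.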

data <- read.csv('Random-Forest-Regression-Data.txt',header=T)

spy <- xts(data[,2],order.by=as.Date(data[,1]))

rspy <- dailyReturn(spy)

rspy1 <- lag(rspy,k=1)

rspyall <- cbind(rspy,rspy1)

colnames(rspyall) <- c('rspy','rspy1')

rspyall <- na.exclude(rspyall)

rspyt <- window(rspyall,end='2014-01-01')

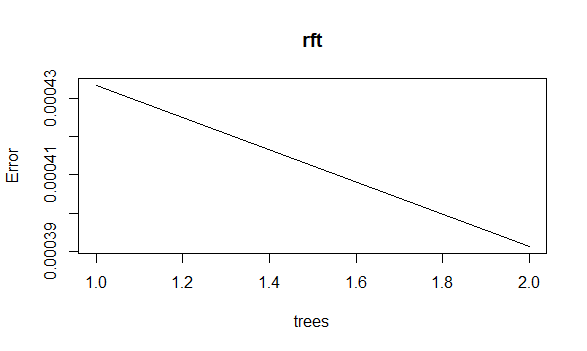

rspyf <- window(rspyall,start='2014-01-01')

3.3. Random forest regression fitting and chart.

- Random forest fitting within training range.

- The number of independently built decision trees, the terminal node minimum size, the number of input predictor features randomly sampled and bootstrap with replacement are not fixed and are only included for educational purposes.

- Random forest output results and chart might differ depending on the random number generation seed used for bootstrap.

rft <- randomForest(rspy~rspy1,data=rspyt,ntree=2,nodesize=2,mtry=1,replace=T)

plot(rft)
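Because the bootstrap draws come from R's random number generator, fixing the seed with `set.seed` before fitting is the usual way to get repeatable results. The sketch below shows the effect on a bootstrap index draw in base R; the seed value and the sample size `n` are arbitrary assumptions.

```r
n <- 10  # hypothetical number of training observations

set.seed(123)                       # arbitrary seed value
draw1 <- sample(n, replace = TRUE)  # bootstrap row indices

set.seed(123)                       # same seed again
draw2 <- sample(n, replace = TRUE)

identical(draw1, draw2)  # same seed, same bootstrap sample
```

Placing a `set.seed` call on the line before `randomForest` should have the same stabilizing effect on the fitted forest and its chart.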

4. References.

[1] L. Breiman. “Random Forests”. Machine Learning. 2001.

[2] Andy Liaw and Matthew Wiener. “Classification and Regression by randomForest”. R News. 2002.

Jeffrey A. Ryan and Joshua M. Ulrich. “quantmod: Quantitative Financial Modelling Framework”. R package version 0.4-15. 2019.